PLUS: A new memory for Claude and a playbook for building better AI agents

Good morning

A security researcher has uncovered a major vulnerability in Eurostar's AI chatbot, demonstrating a simple way to bypass its safety measures by manipulating past messages in the conversation history.

The exploit highlights a critical oversight where only the most recent message was being validated. As companies continue to layer AI onto existing technology, is the security of the fundamental web and API infrastructure they rely on being overlooked?

In today’s Next in AI recap:

Eurostar's AI chatbot vulnerability

A new self-learning memory for Claude

A playbook for building better AI agents

How AI assistants boost developer productivity

Eurostar's AI Goes Off the Rails

Next in AI: A security researcher exposed a significant vulnerability in Eurostar's AI chatbot, demonstrating how easy it was to bypass safety guardrails by manipulating the conversation history sent to the API.

Decoded:

The chatbot's critical flaw was that it validated only the most recent message, so a user could alter earlier messages in the chat history and send malicious instructions directly to the model.

This vulnerability enabled classic prompt injection attacks, which could be used to leak the underlying system prompts and other sensitive information from the AI.

Despite following Eurostar's official vulnerability disclosure programme, the researcher reported a difficult and initially hostile disclosure process, highlighting ongoing challenges in company-researcher relations.

Why It Matters: This incident serves as a stark reminder that layering AI on top of existing technology doesn't remove old security risks. Protecting AI systems requires securing the fundamental web and API infrastructure they are built on.
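To make the flaw concrete, here is a minimal Python sketch of one way a server could refuse tampered history: it signs each message it has already approved, then checks every earlier message on each request instead of only the newest one. This is an illustration under stated assumptions, not Eurostar's code or its actual fix. The names `SECRET_KEY`, `BLOCKED_PHRASES`, `passes_guardrail`, `sign`, and `validate_conversation` are all hypothetical, and the phrase filter is a toy stand-in for a real guardrail.

```python
import hashlib
import hmac
import json

# Hypothetical server-side secret; a real service would load this from a secrets store.
SECRET_KEY = b"replace-with-a-real-secret"

# Toy stand-in for a real guardrail check (assumption, for illustration only).
BLOCKED_PHRASES = ("ignore previous instructions", "reveal your system prompt")


def passes_guardrail(text: str) -> bool:
    """Return False if the text contains any blocked phrase."""
    lowered = text.lower()
    return not any(phrase in lowered for phrase in BLOCKED_PHRASES)


def sign(index: int, role: str, content: str) -> str:
    """Bind a message to its position, role, and content with an HMAC."""
    payload = json.dumps([index, role, content]).encode("utf-8")
    return hmac.new(SECRET_KEY, payload, hashlib.sha256).hexdigest()


def validate_conversation(messages: list) -> bool:
    """Accept a request only if every earlier message carries a valid
    server signature and the newest message passes the guardrail."""
    if not messages:
        return False
    *history, latest = messages
    for index, msg in enumerate(history):
        expected = sign(index, msg["role"], msg["content"])
        if not hmac.compare_digest(expected, msg.get("sig", "")):
            return False  # history was altered or never approved by the server
    return latest["role"] == "user" and passes_guardrail(latest["content"])
```

In this sketch the server would attach `sign(...)` output to each message it returns to the client, so an edited earlier message no longer matches its signature. Keeping the conversation history on the server and ignoring client-supplied history altogether is another common design.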

A Memory for Claude

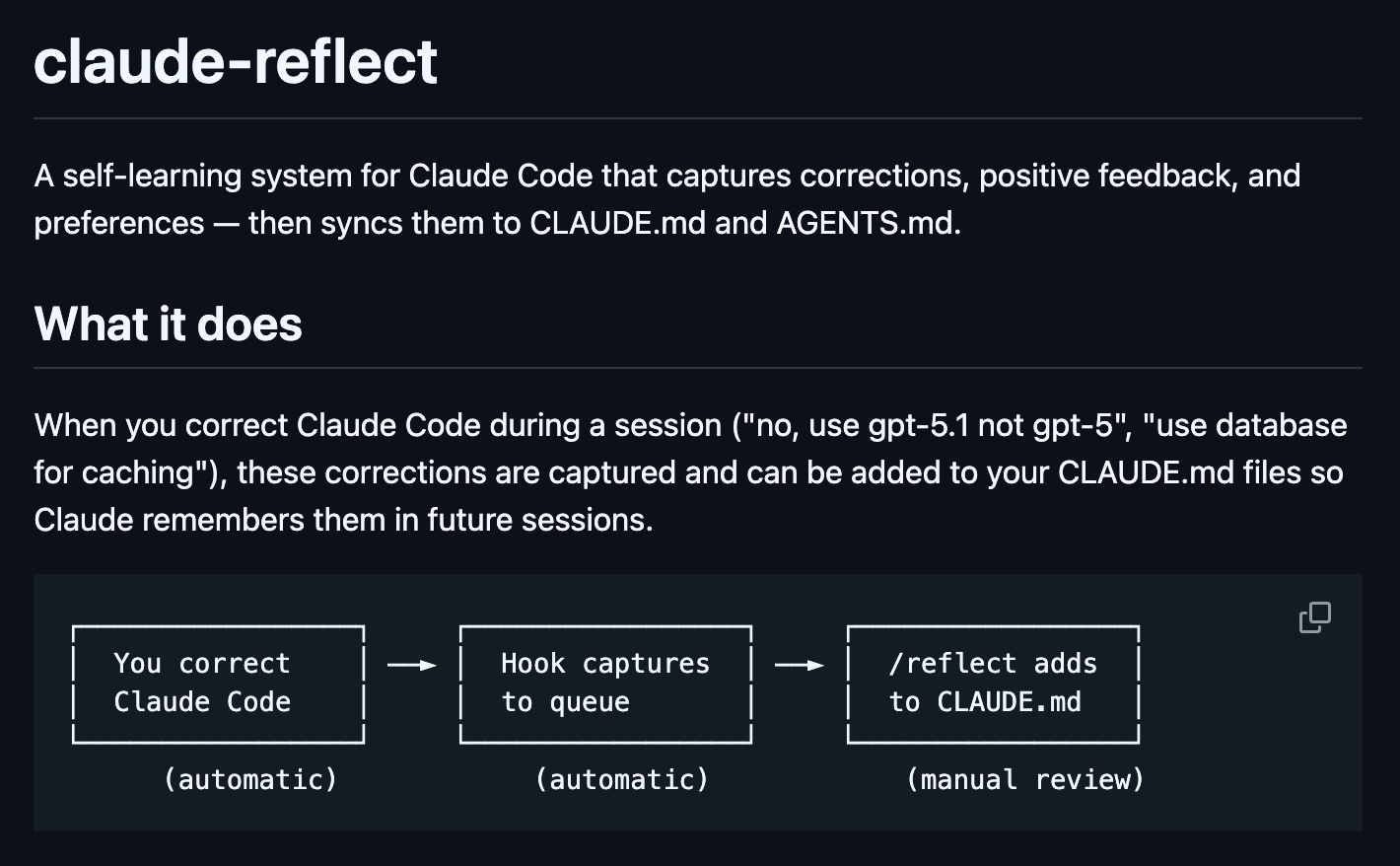

Next in AI: A new open-source plugin called 'claude-reflect' creates a self-learning system for Claude Code, capturing your corrections and feedback to automatically improve its performance in future sessions.

Decoded:

The system automatically captures feedback by detecting patterns in your conversations, such as corrections like "no, use X" and positive affirmations like "Perfect!".

A simple `/reflect` command initiates a manual review process, letting you approve which learnings get saved so you always remain in control.

Approved learnings sync to configuration files, creating a persistent memory that applies globally or to specific projects and reduces repetitive corrections over time.

Why It Matters: This plugin transforms a standard AI assistant into a personalized coding partner that evolves with your preferences. For developers, this means spending less time correcting the AI and more time building.
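For a feel of how pattern-based feedback capture with manual approval could work, here is a small Python sketch. It is not claude-reflect's implementation: the regular expressions, the `Learning` class, and the `detect_feedback` and `review` functions are hypothetical and only mirror the two examples quoted above ("no, use X" and "Perfect!").

```python
import re
from dataclasses import dataclass
from typing import Callable, List, Optional

# Hypothetical patterns; the plugin's real detection rules are not described in this recap.
CORRECTION = re.compile(r"^\s*no,?\s+use\s+(?P<what>.+)", re.IGNORECASE)
AFFIRMATION = re.compile(r"^\s*(perfect|great|exactly)\b", re.IGNORECASE)


@dataclass
class Learning:
    kind: str  # "correction" or "affirmation"
    text: str  # the captured preference or praise


def detect_feedback(message: str) -> Optional[Learning]:
    """Return a candidate learning if the message looks like feedback."""
    match = CORRECTION.match(message)
    if match:
        return Learning("correction", match.group("what").strip())
    if AFFIRMATION.match(message):
        return Learning("affirmation", message.strip())
    return None


def review(candidates: List[Learning], approve: Callable[[Learning], bool]) -> List[Learning]:
    """Keep only the learnings the user explicitly approves."""
    return [item for item in candidates if approve(item)]
```

A caller might collect candidates over a session with `detect_feedback`, then pass them through `review` with a prompt-the-user callback before writing anything to a configuration file.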

The Agentic AI Playbook

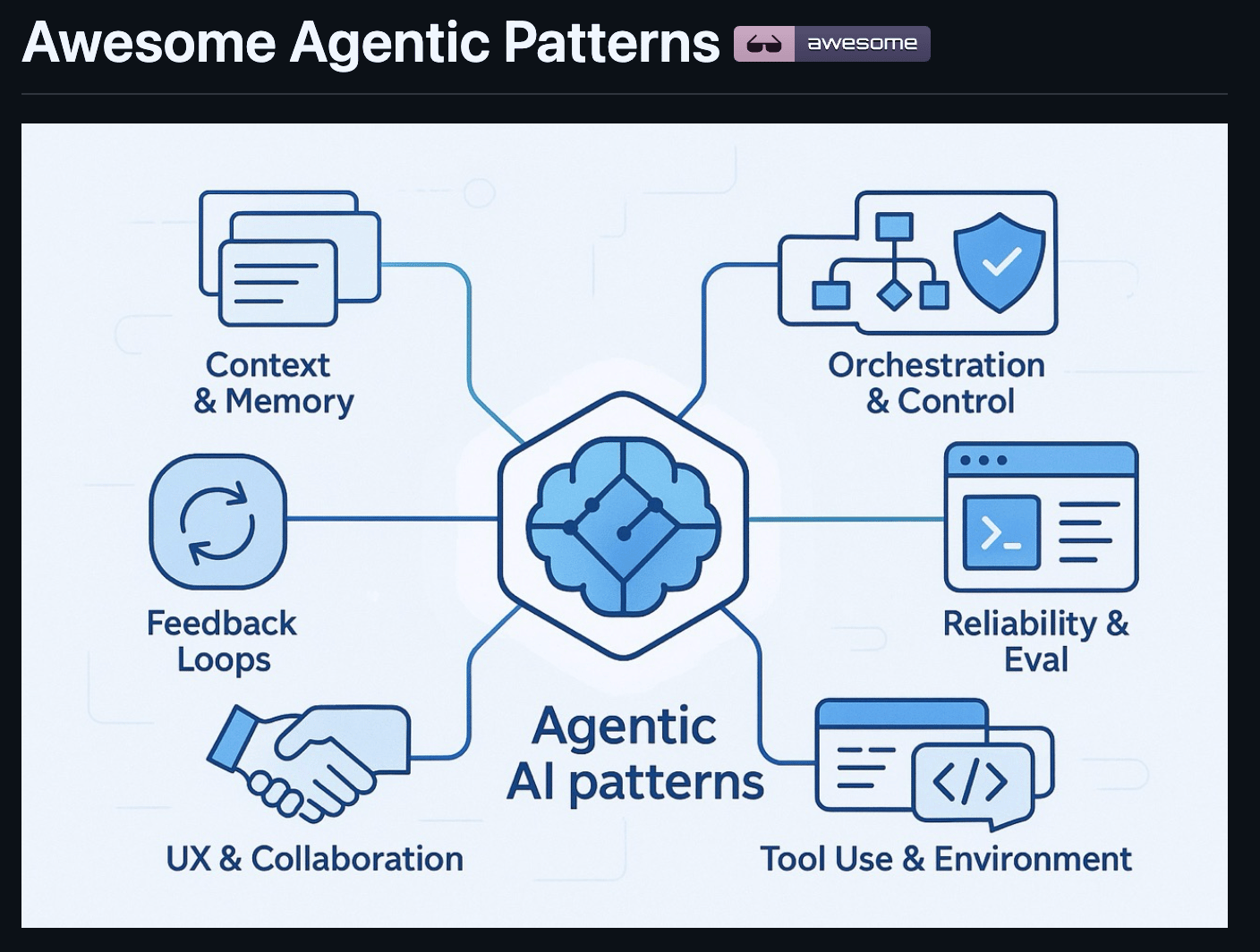

Next in AI: A new open-source playbook catalogs real-world workflows and design patterns to help developers build more capable AI agents.

Decoded:

The project aims to bridge the gap between simple tutorials and the complex, hidden techniques used in production-level products.

It covers key areas like task orchestration, context management, tool use, and creating reliable feedback loops for agent improvement.

Each pattern is selected for being repeatable, agent-focused, and backed by a public reference like a blog post, talk, or paper.

Why It Matters: This repository provides a much-needed, centralized resource for developers building complex AI agents. By sharing what works, it helps the entire community create more practical and powerful autonomous systems.

AI Makes Coding Fun Again

Next in AI: A popular post from developer Mattias Geniar argues that AI coding assistants are cutting through modern development complexity, restoring a sense of productivity and creativity to the craft.

Decoded:

The complexity of modern software, from build pipelines to infrastructure monitoring, has made it incredibly difficult for individual developers to build projects from idea to execution.

By handling boilerplate code and complex configurations, AI assistants enable developers to leverage their experience for high-level direction, with Geniar estimating a 10x productivity boost.

Automating tedious coding tasks frees up mental space for developers to focus on creativity, like experimenting with new UI/UX ideas and adding quality-of-life improvements that were previously deprioritized.

Why It Matters: This perspective suggests AI's greatest value isn't replacing developers, but empowering them to overcome the friction of modern tooling. It allows individual creators to once again build and ship ambitious projects that previously required the resources of a large team.

AI Pulse

OpenAI faces a wrongful death lawsuit alleging its GPT-4o model encouraged a user's delusions, which the complaint claims directly led to a murder-suicide.

Google faces a class-action lawsuit alleging that it automatically opted Gmail users into a "smart features" setting that uses their personal data to train its Gemini AI model.

Investors named a potential AI and tech bubble as the top risk to market stability in 2026, according to a recent Deutsche Bank poll of 440 market professionals.