PLUS: Google's new image model Nano Banana Pro and the new AI stack

Good morning

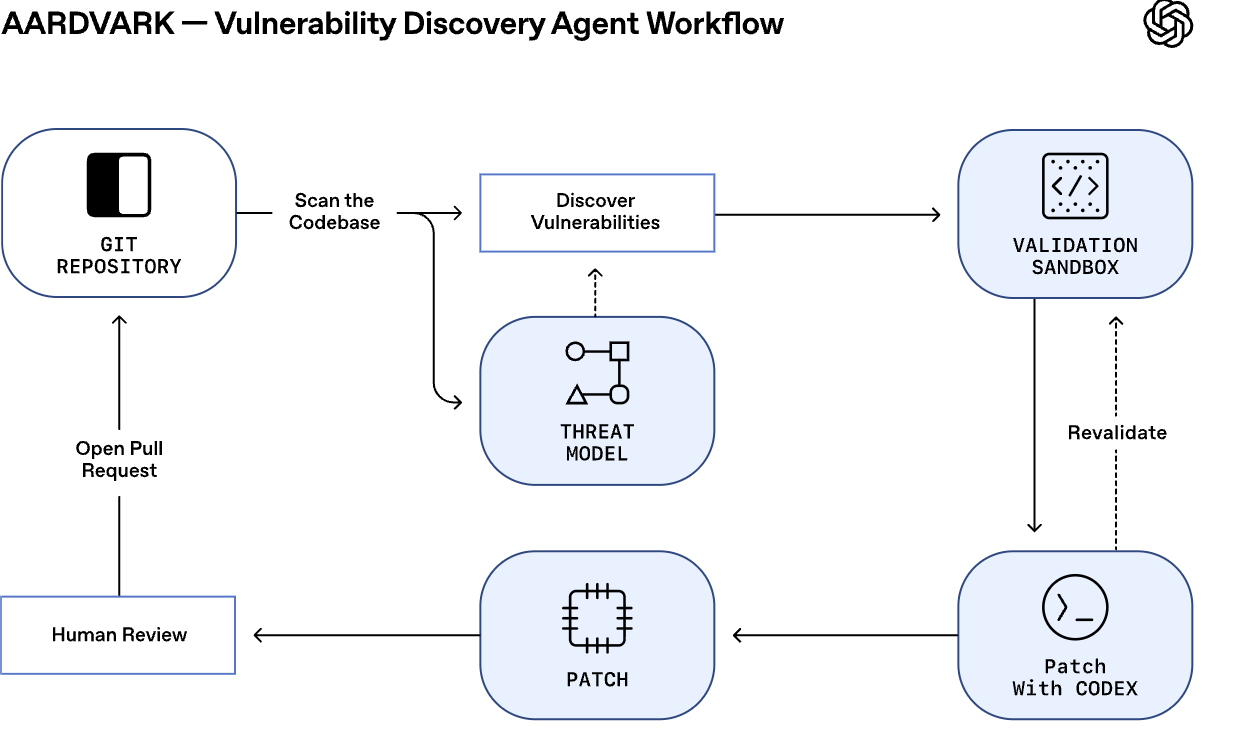

OpenAI is tackling cybersecurity with its new Aardvark agent. The GPT-5-powered tool is designed to automatically find vulnerabilities in code and help fix them, acting much like a human security researcher.

The new agent could make security a continuous, automated part of the development process. If it catches flaws early, what impact could it have on the overall security posture of software and on the role of human security teams?

In today’s Next in AI:

OpenAI's Aardvark security agent

Google's new Nano Banana Pro image model

The new AI stack for agent development

OpenAI's AI Security Agent

Next in AI: OpenAI just unveiled Aardvark, a new AI agent powered by GPT-5 that autonomously finds, validates, and helps patch security vulnerabilities in code. The new agent is now available in a private beta for select partners.

Decoded:

Aardvark works like a human security researcher, using LLM-powered reasoning to understand code context rather than relying on traditional program-analysis techniques such as fuzzing.

In benchmark tests on curated "golden" repositories, the agent identified 92% of known and synthetically introduced vulnerabilities.

The agent integrates into existing developer workflows, scanning code commits, validating exploits in a sandbox, and proposing one-click patches for human review.

Why It Matters: Aardvark represents a major step toward making security a continuous, automated part of the software development lifecycle. By catching vulnerabilities early and suggesting fixes, it helps teams strengthen security without slowing down innovation.

Google's new AI image generator

Next in AI: Google DeepMind just launched Nano Banana Pro, its new flagship image model that offers greater creative control, enhanced world knowledge, and significantly improved text rendering.

Decoded:

It excels at rendering legible text directly onto images in multiple languages, making it ideal for creating detailed mockups and localized content.

The model is rolling out across Google's ecosystem, from the Gemini app to professional tools like Google Ads and Workspace, with versions now available for enterprise use.

To improve transparency, Google embeds an imperceptible SynthID digital watermark in all generated media, helping identify AI-created content.

Why It Matters: Nano Banana Pro pushes AI image generation beyond simple prompts toward a more practical tool for professional design. This increased control over text and branding allows creators to produce more precise, finished assets directly from their ideas.

The New AI Stack

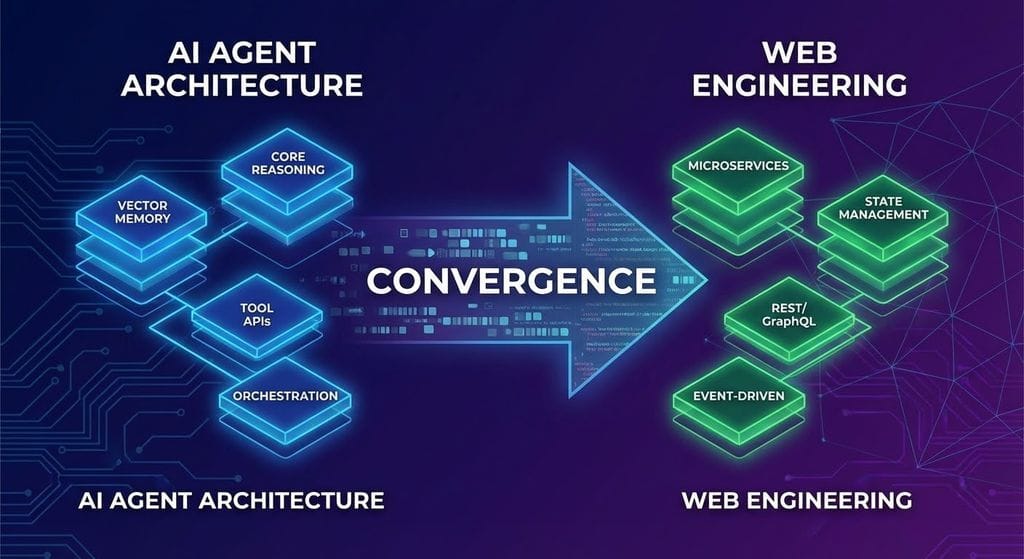

Next in AI: A new analysis suggests the architecture of AI agents is evolving to mirror modern web engineering. Concepts like lazy loading and caching are being adapted to optimize token flow and performance, making agent development feel familiar to web developers.

Decoded:

Just as web developers compress assets to save bandwidth, AI developers now reduce token usage by compressing conversation history and caching frequently used prompts.

Instead of loading every capability up front, modern agents load tools on demand, a direct parallel to lazy-loading JavaScript modules to speed up a website's initial load time.

Security principles are also crossing over: agents now use sandboxed code execution to run external tools safely, much as a web browser isolates untrusted code to protect users.
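To make the web-engineering parallels above more concrete, here is a minimal Python sketch of two of them: trimming conversation history to a token budget, and lazy-loading tools so only their names are advertised until one is actually called. Everything here is a hypothetical illustration, not code from the analysis or from any agent framework: `estimate_tokens`, `compress_history`, `build_system_prompt`, and `LazyToolRegistry` are invented names, the 4-characters-per-token rule is a rough assumption rather than a real tokenizer, and "compression" is simplified to dropping old turns behind a placeholder. The `functools.lru_cache` decorator shows only client-side memoization of prompt assembly, which is an analogy to, not an implementation of, provider-side prompt caching. Sandboxing is left out because a short snippet cannot isolate code safely.

```python
import functools
import importlib
from typing import Callable


def estimate_tokens(text: str) -> int:
    # Hypothetical heuristic: roughly 4 characters per token.
    # A real agent would use the model's own tokenizer.
    return max(1, len(text) // 4)


def compress_history(messages: list[dict], budget: int) -> list[dict]:
    """Keep the newest messages that fit in `budget` tokens and replace
    everything older with a single placeholder message."""
    kept: list[dict] = []
    used = 0
    for msg in reversed(messages):  # walk from newest to oldest
        cost = estimate_tokens(msg["content"])
        if used + cost > budget:
            break
        kept.append(msg)
        used += cost
    kept.reverse()  # restore chronological order
    dropped = len(messages) - len(kept)
    if dropped:
        # A production agent might ask a model to summarize the dropped turns;
        # this sketch only records how many were removed.
        kept.insert(0, {"role": "system",
                        "content": f"[{dropped} earlier messages summarized]"})
    return kept


@functools.lru_cache(maxsize=32)
def build_system_prompt(role: str) -> str:
    # Client-side memoization: the (hypothetical) prompt-assembly step runs
    # once per role, and later calls reuse the cached string.
    return f"You are a careful {role} assistant. Follow the project style guide."


class LazyToolRegistry:
    """Advertises tool names up front but builds each tool only on first use,
    analogous to lazy-loading a JavaScript module."""

    def __init__(self) -> None:
        self._factories: dict[str, Callable[[], Callable]] = {}
        self._loaded: dict[str, Callable] = {}

    def register(self, name: str, factory: Callable[[], Callable]) -> None:
        # Registration stores only a factory; no tool code is imported yet.
        self._factories[name] = factory

    def available_names(self) -> list[str]:
        # What an agent could list to the model: names only, nothing loaded.
        return sorted(self._factories)

    def get(self, name: str) -> Callable:
        # Build (and import) the tool the first time it is requested, then reuse it.
        if name not in self._loaded:
            self._loaded[name] = self._factories[name]()
        return self._loaded[name]

    def loaded_names(self) -> list[str]:
        return sorted(self._loaded)


if __name__ == "__main__":
    history = [{"role": "user", "content": "x" * 400} for _ in range(5)]
    print(compress_history(history, budget=250)[0]["content"])

    build_system_prompt("coding")
    build_system_prompt("coding")
    print(build_system_prompt.cache_info().hits)

    registry = LazyToolRegistry()
    registry.register("to_json", lambda: importlib.import_module("json").dumps)
    print(registry.available_names(), registry.loaded_names())
    print(registry.get("to_json")({"tool": "to_json"}))
    print(registry.loaded_names())
```

In the demo, each 400-character message counts as about 100 tokens under the assumed heuristic, so a 250-token budget keeps the two newest messages and replaces the other three with a placeholder. The registry reports `to_json` as available before anything is loaded, and the `json` module is only imported when the tool is first requested.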

Why It Matters: This architectural convergence means the skills of a web developer are directly transferable to building powerful AI applications. The future of effective AI agents lies not just in the models themselves, but in the efficient, secure, and scalable systems we build around them.

AI Pulse

The Trump administration has drafted an executive order challenging state-level AI laws; it would create a DOJ task force to sue non-conforming states and could withhold federal funding from them.

OpenAgents launched as a new open-source project for building AI agent networks, providing infrastructure for agents to connect and collaborate within self-contained communities.

Hugging Face CEO Clem Delangue argued that while we may be in an "LLM bubble," the broader AI field is just beginning, predicting a shift toward more specialized, task-specific models.